This series works through the CUDA C Programming Guide. This post covers chapter one.

The chapter has four parts:

- From Graphics Processing to General Purpose Parallel Computing

- CUDA - A General-Purpose Parallel Computing Platform and Programming Model

- A Scalable Programming Model

- Document Structure

From Graphics Processing to General Purpose Parallel Computing

Driven by the insatiable market demand for realtime, high-definition 3D graphics, the programmable Graphics Processing Unit (GPU) has evolved into a highly parallel, multithreaded, manycore processor with tremendous computational horsepower and very high memory bandwidth.

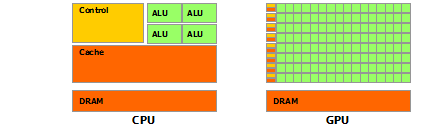

The reason behind the discrepancy in floating-point capability between the CPU and the GPU is that the GPU is specialized for highly parallel computation - exactly what graphics rendering is about - and therefore designed such that more transistors are devoted to data processing rather than data caching and flow control, as schematically illustrated by Figure 3.

This conceptually works for highly parallel computations because the GPU can hide memory access latencies with computation instead of avoiding memory access latencies through large data caches and flow control.

Data-parallel processing maps data elements to parallel processing threads. Many applications that process large data sets can use a data-parallel programming model to speed up the computations. In 3D rendering, large sets of pixels and vertices are mapped to parallel threads. Similarly, image and media processing applications such as post-processing of rendered images, video encoding and decoding, image scaling, stereovision, and pattern recognition can map image blocks and pixels to parallel processing threads. In fact, many algorithms outside the field of image rendering and processing are accelerated by data-parallel processing, from general signal processing or physics simulation to computational finance or computational biology.
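As a minimal sketch of mapping data elements to threads, the kernel below assigns one array element to each thread. The names `axpyKernel` and `axpyOnGpu` are hypothetical and chosen for illustration only. The host helper assumes both inputs have the same length and omits CUDA error checking for brevity.

```cuda
#include <cuda_runtime.h>
#include <vector>

// One thread per data element: thread i computes out[i] = a * x[i] + y[i].
__global__ void axpyKernel(const float* x, const float* y, float* out, float a, int n)
{
    int i = blockIdx.x * blockDim.x + threadIdx.x;  // global element index
    if (i < n) {                                    // the last block may be partly idle
        out[i] = a * x[i] + y[i];
    }
}

// Hypothetical host helper (assumes x.size() == y.size(); no error checking).
std::vector<float> axpyOnGpu(float a, const std::vector<float>& x, const std::vector<float>& y)
{
    int n = static_cast<int>(x.size());
    std::vector<float> out(n);
    if (n == 0) return out;

    size_t bytes = n * sizeof(float);
    float *dX = nullptr, *dY = nullptr, *dOut = nullptr;
    cudaMalloc(&dX, bytes);
    cudaMalloc(&dY, bytes);
    cudaMalloc(&dOut, bytes);
    cudaMemcpy(dX, x.data(), bytes, cudaMemcpyHostToDevice);
    cudaMemcpy(dY, y.data(), bytes, cudaMemcpyHostToDevice);

    int threadsPerBlock = 256;
    int blocks = (n + threadsPerBlock - 1) / threadsPerBlock;  // round up to cover all n
    axpyKernel<<<blocks, threadsPerBlock>>>(dX, dY, dOut, a, n);

    // This blocking copy waits for the kernel on the default stream to finish.
    cudaMemcpy(out.data(), dOut, bytes, cudaMemcpyDeviceToHost);
    cudaFree(dX);
    cudaFree(dY);
    cudaFree(dOut);
    return out;
}
```

Calling `axpyOnGpu(3.0f, x, y)` launches enough threads to cover every element, so the amount of parallelism follows the size of the data set rather than any property of the code.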

CUDA - A General-Purpose Parallel Computing Platform and Programming Model

In November 2006, NVIDIA introduced CUDA®, a general purpose parallel computing platform and programming model that leverages the parallel compute engine in NVIDIA GPUs to solve many complex computational problems in a more efficient way than on a CPU.

CUDA comes with a software environment that allows developers to use C++ as a high-level programming language. As illustrated by Figure 4, other languages, application programming interfaces, or directives-based approaches are supported, such as FORTRAN, DirectCompute, and OpenACC.

A Scalable Programming Model

The advent of multicore CPUs and manycore GPUs means that mainstream processor chips are now parallel systems. The challenge is to develop application software that transparently scales its parallelism to leverage the increasing number of processor cores, much as 3D graphics applications transparently scale their parallelism to manycore GPUs with widely varying numbers of cores. The CUDA parallel programming model is designed to overcome this challenge while maintaining a low learning curve for programmers familiar with standard programming languages such as C.

At its core are three key abstractions - a hierarchy of thread groups, shared memories, and barrier synchronization - that are simply exposed to the programmer as a minimal set of language extensions.

These abstractions provide fine-grained data parallelism and thread parallelism, nested within coarse-grained data parallelism and task parallelism. They guide the programmer to partition the problem into coarse sub-problems that can be solved independently in parallel by blocks of threads, and each sub-problem into finer pieces that can be solved cooperatively in parallel by all threads within the block.
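The three abstractions can be seen together in a small sum reduction. This is a sketch under assumptions, not code from the guide: the names `blockSumKernel`, `sumOnGpu`, and `kThreadsPerBlock` are hypothetical, the kernel assumes it is launched with exactly `kThreadsPerBlock` threads per block (a power of two), and error checking is omitted. Each block solves one coarse sub-problem independently, while the threads inside a block cooperate through shared memory and synchronize at barriers.

```cuda
#include <cuda_runtime.h>
#include <vector>

constexpr int kThreadsPerBlock = 256;  // assumed block size; must be a power of two

// Each block reduces its own slice of the input to a single partial sum.
__global__ void blockSumKernel(const float* in, float* partial, int n)
{
    __shared__ float tile[kThreadsPerBlock];        // shared by the threads of one block

    int tid = threadIdx.x;                          // position within the block
    int i = blockIdx.x * blockDim.x + tid;          // position within the whole grid
    tile[tid] = (i < n) ? in[i] : 0.0f;
    __syncthreads();                                // barrier: the tile is fully loaded

    // Tree reduction: halve the number of active threads at every step.
    for (int stride = blockDim.x / 2; stride > 0; stride /= 2) {
        if (tid < stride) {
            tile[tid] += tile[tid + stride];
        }
        __syncthreads();                            // barrier: this step is complete
    }

    if (tid == 0) {
        partial[blockIdx.x] = tile[0];              // one result per block
    }
}

// Hypothetical host helper: launches the kernel and adds the per-block results on the CPU.
float sumOnGpu(const std::vector<float>& data)
{
    int n = static_cast<int>(data.size());
    if (n == 0) return 0.0f;

    int blocks = (n + kThreadsPerBlock - 1) / kThreadsPerBlock;
    float *dIn = nullptr, *dPartial = nullptr;
    cudaMalloc(&dIn, n * sizeof(float));
    cudaMalloc(&dPartial, blocks * sizeof(float));
    cudaMemcpy(dIn, data.data(), n * sizeof(float), cudaMemcpyHostToDevice);

    blockSumKernel<<<blocks, kThreadsPerBlock>>>(dIn, dPartial, n);

    std::vector<float> partial(blocks);
    cudaMemcpy(partial.data(), dPartial, blocks * sizeof(float), cudaMemcpyDeviceToHost);
    cudaFree(dIn);
    cudaFree(dPartial);

    float total = 0.0f;
    for (float p : partial) total += p;
    return total;
}
```

No block reads another block's shared memory or waits on another block, which is what allows the blocks to be scheduled in any order.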

A GPU is built around an array of Streaming Multiprocessors (SMs) (see Hardware Implementation for more details). A multithreaded program is partitioned into blocks of threads that execute independently from each other, so that a GPU with more multiprocessors will automatically execute the program in less time than a GPU with fewer multiprocessors.
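The sketch below illustrates this independence from the host side. The grid size is chosen from the problem alone, and the number of multiprocessors is only queried for information. The names `squareBlockIndexKernel`, `runIndependentBlocks`, and `multiprocessorCount` are hypothetical, and error handling is reduced to a minimum.

```cuda
#include <cuda_runtime.h>
#include <vector>

// Every block writes its own result and never depends on another block.
__global__ void squareBlockIndexKernel(int* out)
{
    if (threadIdx.x == 0) {
        out[blockIdx.x] = blockIdx.x * blockIdx.x;
    }
}

// Returns the number of SMs on the given device, or 0 if the query fails.
int multiprocessorCount(int device = 0)
{
    cudaDeviceProp prop;
    if (cudaGetDeviceProperties(&prop, device) != cudaSuccess) return 0;
    return prop.multiProcessorCount;
}

// Hypothetical host helper: the launch does not mention the SM count at all.
std::vector<int> runIndependentBlocks(int numBlocks)
{
    if (numBlocks <= 0) return {};

    std::vector<int> out(numBlocks);
    int* dOut = nullptr;
    cudaMalloc(&dOut, numBlocks * sizeof(int));

    squareBlockIndexKernel<<<numBlocks, 32>>>(dOut);

    cudaMemcpy(out.data(), dOut, numBlocks * sizeof(int), cudaMemcpyDeviceToHost);
    cudaFree(dOut);
    return out;
}
```

The same call to `runIndependentBlocks(100)` gives the same result on a device with few SMs or many; only the number of blocks that can run at the same time differs.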

Document Structure

This document is organized into the following chapters:

- Chapter Introduction is a general introduction to CUDA.

- Chapter Programming Model outlines the CUDA programming model.

- Chapter Programming Interface describes the programming interface.

- Chapter Hardware Implementation describes the hardware implementation.

- Chapter Performance Guidelines gives some guidance on how to achieve maximum performance.

- Appendix CUDA-Enabled GPUs lists all CUDA-enabled devices.

- Appendix C++ Language Extensions is a detailed description of all extensions to the C++ language.

- Appendix Cooperative Groups describes synchronization primitives for various groups of CUDA threads.

- Appendix CUDA Dynamic Parallelism describes how to launch and synchronize one kernel from another.

- Appendix Mathematical Functions lists the mathematical functions supported in CUDA.

- Appendix C++ Language Support lists the C++ features supported in device code.

- Appendix Texture Fetching gives more details on texture fetching.

- Appendix Compute Capabilities gives the technical specifications of various devices, as well as more architectural details.

- Appendix Driver API introduces the low-level driver API.

- Appendix CUDA Environment Variables lists all the CUDA environment variables.

- Appendix Unified Memory Programming introduces the Unified Memory programming model.